Event-Driven Caching

How Event-Driven Architecture Changes Our Cache Strategy

Purpose of Caching

As a Consumer of your shiny new service, I want my answers Now. Real-time has become the de facto standard. It's no longer good enough to take our time digging through millions of possibilities and return ~500 ms later. And don't believe that this applies only to user interactivity.

Caching allows an application to retrieve required data from an in-memory data store. While much faster than digging through a more persistent store, the in-memory call is not free.

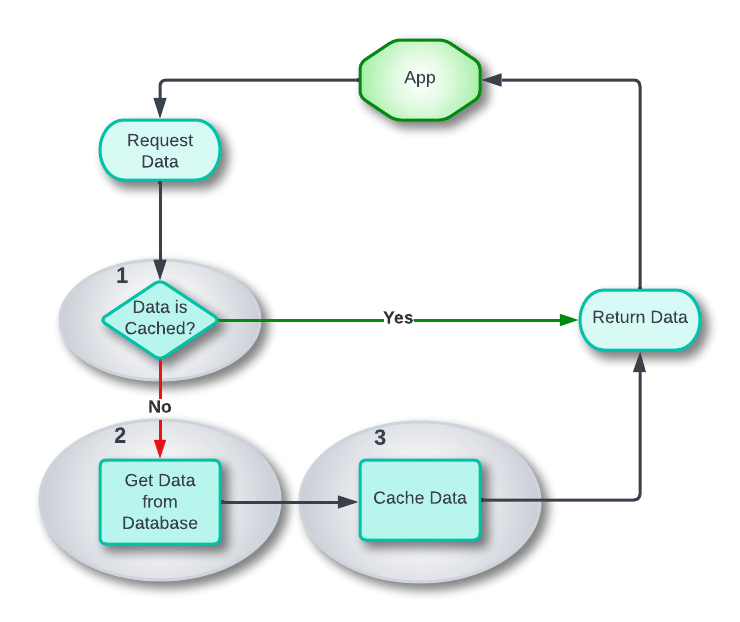

Cache On-Demand

Typically, caches are remote with respect to the application itself. Even if local memory stores the cache, each call to the store increases contention. The typical process has three steps: first, we check the cache and return early on a hit; otherwise, we load the data from the database; and third, we cache that data for future use with a heuristic Time to Live (TTL). This On-Demand style is common among CRUD apps that put a cache layer on top of a database-first mentality.
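The three steps can be sketched in a few lines of Python. This is a minimal illustration, not a production cache: the class name `OnDemandCache`, the `load_from_db` callback, and the injectable `clock` are all assumptions made for the example, and a plain dictionary stands in for the remote cache.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class OnDemandCache:
    """Cache On-Demand with a fixed, heuristic TTL (hypothetical sketch)."""

    def __init__(
        self,
        load_from_db: Callable[[str], Optional[dict]],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load_from_db = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expires_at, value)
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, key: str) -> Optional[dict]:
        # Step 1: check the cache and return early on a fresh hit.
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self._clock():
            return entry[1]
        # Step 2: on a miss or an expired entry, load from the database.
        value = self._load_from_db(key)
        # Step 3: cache the result for future calls, guessing at a TTL.
        if value is not None:
            self._entries[key] = (self._clock() + self._ttl, value)
        return value
```

Note that nothing reaches the cache until somebody asks for it, and the database answers every miss. Those are the two properties the rest of this post pushes against.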

Cache On-Demand can encompass Cache Aside, Read/Write Through, and Write Behind; the patterns follow largely the same process. The owner of each step may vary, or a step may be done outside the hot path. The key, though, is that each strategy requires Demand First and a Database Source of Truth (SoT).

Cache Ahead

Cache Ahead is all about Knowledge Management. Cache Ahead is more than simply Write Through. When we drive toward a realization of real-time, placing our slowest source of truth closest to our return is inefficient. In other words, even with a cache layer placed on top of a database, we've chosen the database as the true source of our data. We've relegated the cache to be little more than a band-aid on our architecture.

We need a better approach, one that addresses the Writing, Lifetime, and Eviction of cache data. Let's define this strategy by first dropping our CRUD preconceptions and viewing the problem through an Event-Driven lens.

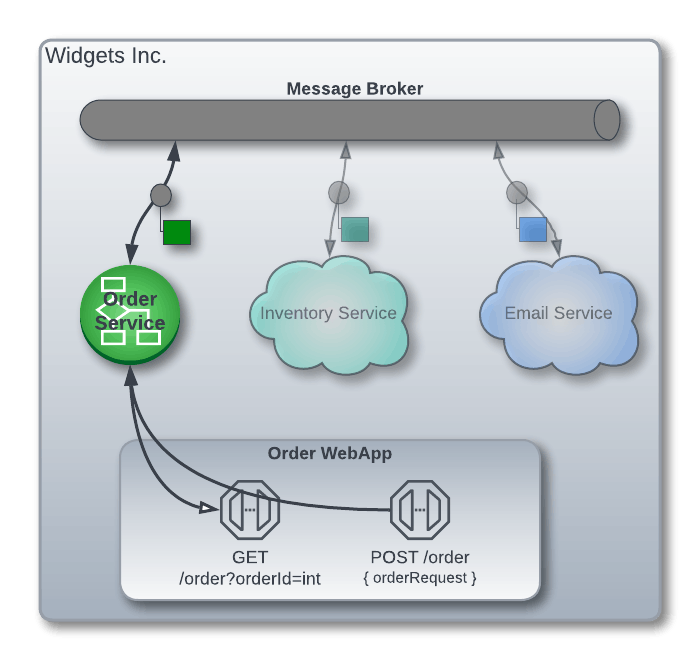

Widgets Inc.

To stage an exploration of Cache Ahead, we'll use the e-commerce unicorn Widgets Inc. The backend we're working with is composed of an Order Service, an Inventory Service, and an Email Service.

Specifically, let's explore the Order Service. Our service both listens to Events and responds to HTTP Requests. I've placed the cache first in line, with the database updated either via the App itself or in a Write-Through from our cache. We'll see Cache Ahead laid out as it fulfills the following requirements of the Order Service:

- Validate an OrderRequest has Products in stock
- Permit the ad-hoc querying of current Orders
- Maintain the Customers' Order

Order Request - New Order

Keeping our Knowledge management perspective, a new Order must be validated and, if successful, persisted. This is the point in time when new knowledge enters our system.

We can assume that inventory has been maintained by our Inventory Service. We can confidently assume that, upon Order validation, we will hit the Inventory Service cache when checking stock levels. With a validated Order, we'll write to our cache.

This was relatively straightforward, much the same as a Write-Through wherein our database backs up our cache. Note that the confident Inventory cache hit foreshadows how we'll Always have a Positive cache hit, i.e. if it's not in the cache, it's not in the database; there's no need to check, and the database is no longer an SoT.
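A new Order under Cache Ahead might look like the sketch below. Everything named here is hypothetical: `Order`, `OrderService`, `place_order`, and the `backing_store` list are stand-ins for illustration, and plain dictionaries play the role of the Inventory and Order caches.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Order:
    order_id: str
    customer_id: str
    items: Dict[str, int]  # product_id -> quantity
    status: str = "Accepted"


class OrderService:
    """Hypothetical Order Service that writes new knowledge cache-first."""

    def __init__(self, inventory_cache: Dict[str, int]) -> None:
        # Assumed to be kept current by the Inventory Service.
        self.inventory_cache = inventory_cache
        # Operational source of truth for Orders.
        self.order_cache: Dict[str, Order] = {}
        # Stand-in for the database sitting behind the cache.
        self.backing_store: List[Order] = []

    def place_order(self, order: Order) -> bool:
        # Validate against the cache only. A product missing from the
        # cache is treated as out of stock; there is no database fallback.
        for product_id, quantity in order.items.items():
            if self.inventory_cache.get(product_id, 0) < quantity:
                return False
        # New knowledge enters the system: cache first, then persist.
        self.order_cache[order.order_id] = order
        self.backing_store.append(order)
        return True
```

The important line is the `get(product_id, 0)`: a miss is an answer, not a trigger to go ask the database.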

Ad-Hoc Order Query

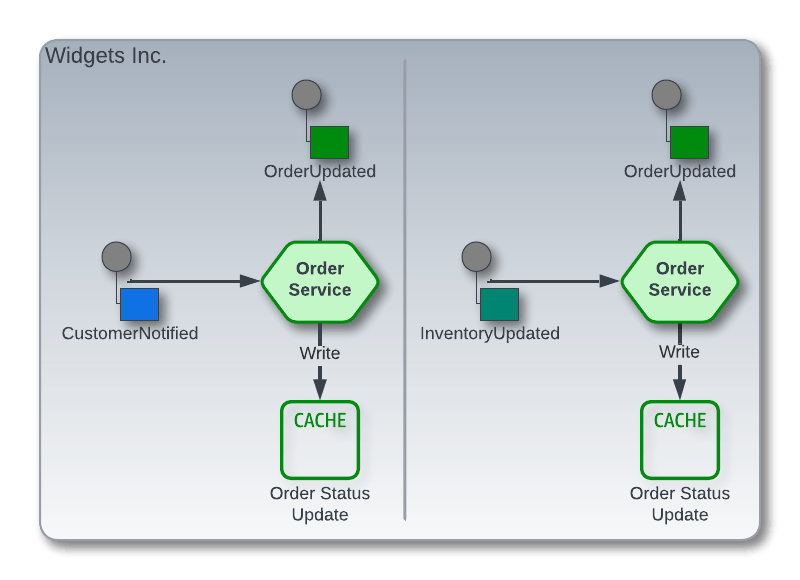

Permitting querying of any Order entails maintaining the Order during its active lifetime. Let's consider two external events that could affect our Order: CustomerNotified and InventoryUpdated.

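```protobuf
syntax = "proto3";

// Emitted when a Customer has been notified about their Order.
message CustomerNotified {
  string MessageId = 1;
  string CausationId = 2;
  string CustomerId = 3;
  NotificationKind Kind = 4;

  // Named NotificationKind so the enum does not collide with the Kind field.
  enum NotificationKind {
    Unspecified = 0;  // proto3 requires the first enum value to be zero
    Confirmation = 1;
    Cancellation = 2;
    Backordered = 3;
    Delivered = 4;
  }
}

// Emitted when the stock level of a Product changes.
message InventoryUpdated {
  string MessageId = 1;
  string CausationId = 2;
  string ProductId = 3;
  int32 Count = 4;
}
```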

Each message causes a State Change to our Order. We maintain our Owned Domain perspective and emit an OrderUpdated event to announce that the Order has been updated. The Order Service will not drive downstream events but simply state its own actions. In this way we maintain the current state of the Order, keeping the cache always the SoT.

We could have seen a variety of scenarios:

- Confirmation ⇒ Order is confirmed
- Cancellation ⇒ Order was canceled
- InventoryUpdated ⇒ Business logic could drive a Backordered

Each of these cases could cause an OrderUpdated event. For instance, if a product becomes Backordered, the Customer should be notified, resulting in a CustomerNotified | Backordered event.

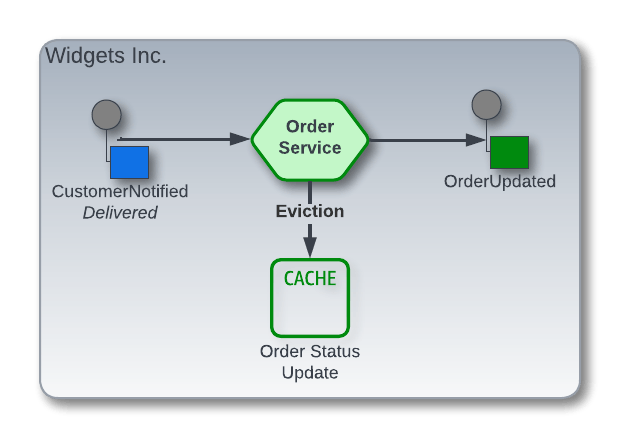
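Here is one way the event handling could be sketched. The handler class, the `OrderUpdated` shape, the status names, and the backorder rule are all assumptions for illustration. In particular, the CustomerNotified message above carries a CustomerId rather than an order identifier, so this sketch simply applies the notification to that Customer's cached Orders.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class CachedOrder:
    order_id: str
    customer_id: str
    items: Dict[str, int]  # product_id -> quantity
    status: str = "Accepted"


@dataclass
class OrderUpdated:
    order_id: str
    status: str
    causation_id: str  # MessageId of the event that caused the change


# Hypothetical mapping from notification kind to Order status.
STATUS_BY_KIND = {
    "Confirmation": "Confirmed",
    "Cancellation": "Canceled",
    "Backordered": "Backordered",
    "Delivered": "Delivered",
}


class OrderEventHandler:
    def __init__(
        self,
        order_cache: Dict[str, CachedOrder],
        publish: Callable[[OrderUpdated], None],
    ) -> None:
        self._orders = order_cache
        self._publish = publish

    def on_customer_notified(self, message_id: str, customer_id: str, kind: str) -> None:
        for order in self._orders.values():
            if order.customer_id == customer_id:
                self._set_status(order, STATUS_BY_KIND[kind], message_id)

    def on_inventory_updated(self, message_id: str, product_id: str, count: int) -> None:
        # Assumed business rule: an accepted Order that needs more units
        # than are in stock becomes Backordered.
        for order in self._orders.values():
            if order.status == "Accepted" and order.items.get(product_id, 0) > count:
                self._set_status(order, "Backordered", message_id)

    def _set_status(self, order: CachedOrder, status: str, causation_id: str) -> None:
        if order.status == status:
            return  # no State Change, so nothing to announce
        order.status = status  # the cache is updated in place
        self._publish(OrderUpdated(order.order_id, status, causation_id))
```

The handler only states what happened to its own Order. Whether anyone sends an email about the Backordered status is up to whoever listens for OrderUpdated.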

Maintaining the Order - Eviction

So far, we haven't discussed anything about a Time to Live (TTL) or Eviction Policy. Keeping in mind that the objective of Cache Ahead is for our cache to be the primary Source of Truth, we want to avoid any form of database fallback. The hard persistent store in this case is better suited as an Event Sourcing store and/or disaster backup, i.e. not an operational store.

The first scary thought is usually that this implies an unbounded cache lifetime and a bloated cache size, with the associated cloud spend. However, consider the On-Demand cache strategy against Cache Ahead in our Order Service context. With On-Demand, if there's little or no demand for current Orders, they're evicted from the cache. In the same breath, ask: does that make sense for the use case? In other words, why are active Orders not interesting enough to remain cached, and if so, can we be comfortable with little more than a best-guess heuristic TTL?

Business Eviction

Cache Ahead is distinctly intended for an Event-Driven Architecture (EDA). Within our EDA and Order Service context, an Order has a real-world, business-defined Operational lifetime. That's what determines just how interesting our Order is, and therefore its lifetime within the cache.

Our particular Order Service context can easily highlight CustomerNotified | Delivered as the End of Life (EoL) of the Order. Surely, we could have a Delivery Service, or the Order could live on through a 7-Day Return Policy; however:

The key takeaway is that EoL is an event originating from the real life business context and our architecture reflects that reality.
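Business Eviction could be sketched like this. The names `OrderCache`, `END_OF_LIFE_KINDS`, and `archive` are hypothetical, and the sketch assumes the incoming notification has already been resolved to an order identifier, which the CustomerNotified message as defined earlier does not carry.

```python
from typing import Dict, List

# Assumption: only Delivered ends an Order's operational life here.
# A real system might add Cancellation or wait out a return window.
END_OF_LIFE_KINDS = {"Delivered"}


class OrderCache:
    """Hypothetical cache whose evictions are driven by business events."""

    def __init__(self) -> None:
        self.active: Dict[str, dict] = {}
        # Stand-in for the event store or backup behind the cache.
        self.archive: List[dict] = []

    def put(self, order_id: str, order: dict) -> None:
        # No TTL is attached: the Order stays until the business says otherwise.
        self.active[order_id] = order

    def on_customer_notified(self, order_id: str, kind: str) -> None:
        order = self.active.get(order_id)
        if order is None:
            return  # not cached means not operationally relevant
        order["status"] = kind
        if kind in END_OF_LIFE_KINDS:
            # End of Life: move the Order out of the operational cache.
            self.archive.append(self.active.pop(order_id))
```

There is no timer anywhere in this sketch. The size of the cache tracks the number of Orders the business is actually working on, not a guess about how long somebody might stay interested.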

That's a wrap

Cache Ahead is an Event-Driven caching strategy intended to meet the real-time needs of modern businesses by reflecting our real business operations and requirements. Databases don't go anywhere, but they begin to take a backseat operationally and serve analytics, backup, and recovery purposes.

What are your thoughts? What are the nuances I glossed over? Hit the comments and we'll chat!